Axera’s M.2 Computing Card Drives Edge and Terminal Deployment of Large Models Amid Release of White Paper on the Chinese AI Chip Industry

Recently, the AI Chip & Computility Industry Development Summit and Cloud-to-Edge AI Technology Forum, jointly hosted by the Shenzhen Semiconductor and Integrated Circuit Industry Alliance and eefocus.com, were held at the Shenzhen Convention & Exhibition Center (Futian). At the event, the 2025 White Paper on the Chinese AI Chip Industry was released. This white paper provides a comprehensive analysis of the technological evolution, industry landscape, implementation challenges, and future trends of domestic AI chips, offering cutting-edge insights and decision-making guidance for industry practitioners. Axera was featured as a key enterprise in the report. Meanwhile, Qi Tang, Axera’s Director for AI Inference Engines, was invited to deliver a keynote speech titled “AI-Native Processors: Enabling Large Models to Run on Terminals,” sharing insights on the development trends of large models for edge and terminal applications and Axera’s innovative solutions.

In 2025, large models for edge and terminal applications have experienced explosive growth, with new models released almost monthly. From DeepSeek in January to FireRedLLM and CosyVoice2 in February, Qwen3 and SmolVLM2 in April, Qwen2.5-VL in May, MiniCPM4 in June, SmolLM3 in July, InternVL3.5 in August, and VoxCPM in September, the pace of model iteration continues to accelerate.

Against this backdrop, industry applications including NVR intelligent upgrades, NAS intelligent transformation, offline real-time translation, voice cloning, home intelligent hubs, intelligent cockpit environmental perception, and embodied intelligent robots have all shown strong demand for local offline operation of multimodal large models, driving continuous improvements in algorithmic accuracy and user experience.

Axera M.2 Computing Card: Plug-and-Play, Efficiently Empowering Edge and Terminal AI

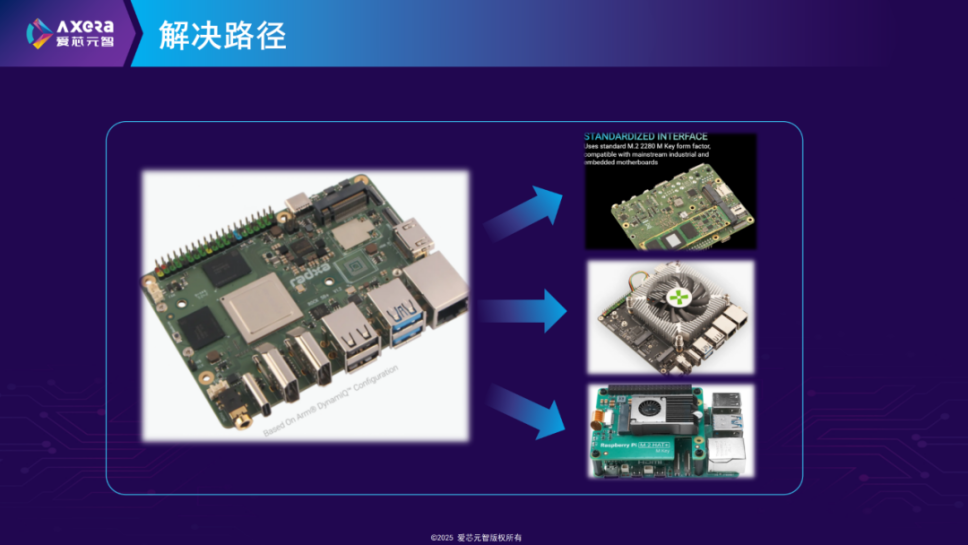

Deploying large models at the edge and on terminals raises several pain points: replacing the main controller is costly, bandwidth consumption is high, and core business stability can be affected. To address these, Axera has launched a computing card based on the AX8850 that uses the standard M.2 2242/2280 interface and consumes less than 8 W. It adds efficient computing capacity to existing devices in a “plug-and-play” manner. The solution also supports scaling from M.2 cards to PCIe cards, which simplifies upgrades and significantly lowers deployment barriers.

Plug-and-Play

M.2 2242/2280 Standard Interface Computing Card Based on AX8850

Supports Expansion from M.2 Card to PCIe Card

Currently, this computing card has been adapted to various hardware platforms, including Raspberry Pi 5, RK3588, and Intel industrial computers. It is also available with supporting community boards, including MaixCAM-HAT, AX-M1, and LLM8850. Together, these options combine compact size, strong performance, low power consumption, and high cost-effectiveness.

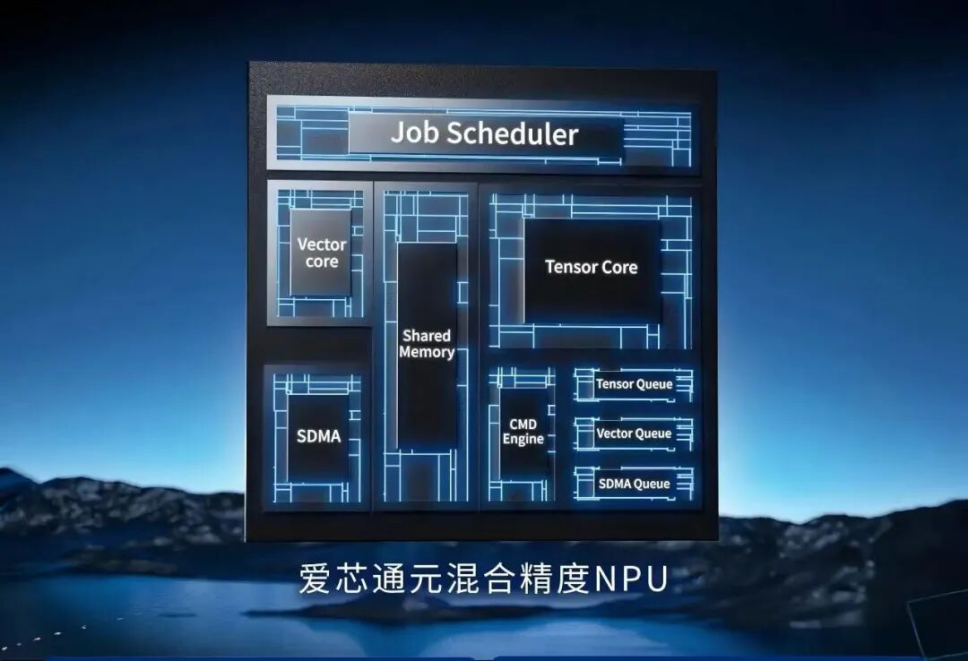

At the core chip level, Axera has launched its Edge AI Chips, which feature the proprietary AXNeutron AI processor and are designed specifically for edge and terminal AI applications. The chips combine an operator instruction set with a dataflow DSA microarchitecture to balance performance, power consumption, and area.

Its operator instruction set supports over 100 AI operators, including Conv, Transformer, and LSTM, covering image, video, text, and multimodal tasks, with native adaptation to mainstream large model architectures such as DeepSeek, Qwen, and Llama. The heterogeneous multi-core architecture integrates efficient tensor cores, flexible vector cores, and high-bandwidth data engines, supporting 4-bit to 32-bit mixed precision computing. Combined with a high-performance hardware multi-core scheduler, it optimizes data dependencies and significantly improves computing utilization efficiency. The mature toolchain ecosystem provides full-process support from model quantization and compilation optimization to deployment, compatible with mainstream frameworks including PyTorch, TensorFlow, and ONNX, greatly enhancing development efficiency.

Currently, Axera’s AI processors cover the full range of computing power across the high-end, mid-range, and low-end segments and have reached large-scale mass production. They deliver a 10x improvement in energy efficiency over traditional GPGPUs, giving customers more cost-effective computing infrastructure for edge and terminal applications such as text-to-image search, general detection, image-to-text generation, and AI agents.

With its M.2 computing cards and AI-native processors, Axera has delivered working implementations across multiple scenarios. These include text search large models and Frigate NVR for NVR intelligent upgrades, and Immich intelligent photo albums for NAS intelligent upgrades. Further examples include video analysis, Stable Diffusion image generation, video super-resolution and interpolation, meeting transcription, and voice cloning. Together they demonstrate the broad applicability of the products and the vitality of the open-source ecosystem.

Axera will continue to leverage AI-native processors and flexible computing solutions to drive efficient, economical, and environmentally friendly deployment of large models at the edge and on terminals, building an edge and terminal intelligence community in pursuit of its corporate mission of “AI for All, AI for a Better World.”